1·2 months ago

That’s going to change in the future with NPUs (neural processing units). They’re already being bundled with both regular CPUs (such as the Ryzen 8000 series) and mobile SoCs (such as the Snapdragon 8 Gen 3). The NPU included with the SD8Gen3, for instance, can run models like Llama 2 - something an average desktop would normally struggle with. Now this is only the 7B model mind you, so it’s a far cry from more powerful models like the 70B, but this will only improve in the future. Over the next few years, NPUs - and applications that take advantage of them - will be a completely normal thing, and it won’t require a household’s worth of energy. I mean, we’re already seeing various applications of it, eg in smartphone cameras, photo editing apps, digital assistants etc. The next would be, I guess, autocorrect and word prediction, and I for one can’t wait to ditch our current, crappy Markov keyboards.

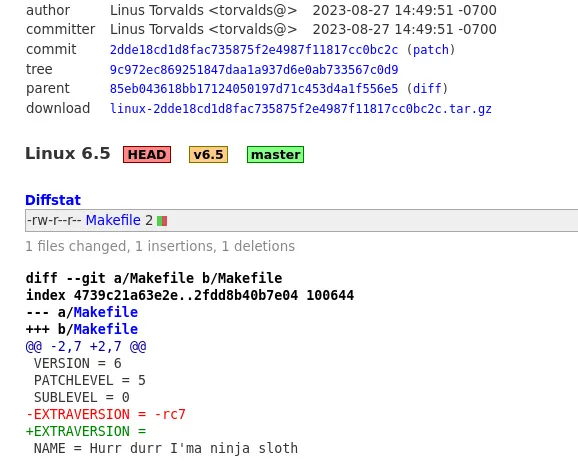

Summarising articles / extracting information / transforming it according to my needs. Everyone knows LLM-based summaries are great, but not many folks utilise them to their full extent. For instance, yesterday, Sony published a blog piece on how a bunch of games were discounted on the PlayStation store. This was like a really long list that I couldn’t be bothered reading, so I asked ChatGPT to display just the genres that I’m interested in, and sort them according to popularity. Another example is parsing changelogs for software releases. Some of them are really long (and not sorted properly - maybe just a dump of commit messages), so I’d ask it to summarise the changes, maybe only show me new feature additions, or any breaking changes etc.
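For the changelog case, here’s a rough non-LLM sketch of the same filtering idea, just to show what “only show me new features or breaking changes” looks like when the log is a dump of commit messages. It assumes the commits follow the Conventional Commits style (`feat(scope): ...`, with `!` marking a breaking change), which plenty of projects don’t, and `filter_changelog` is a made-up helper name. An LLM handles the messy, unstructured logs that this can’t.

```python
import re

# Assumption: entries look like "type(scope)!: description" (Conventional Commits).
ENTRY = re.compile(
    r"^(?P<type>\w+)(?:\((?P<scope>[^)]*)\))?(?P<bang>!)?:\s*(?P<desc>.+)$"
)


def filter_changelog(lines, wanted=("feat",)):
    """Return (features, breaking) descriptions from a list of changelog lines."""
    features, breaking = [], []
    for line in lines:
        # Drop leading list markers such as "- " or "* " before matching.
        match = ENTRY.match(line.strip().lstrip("-* ").strip())
        if not match:
            continue  # not a structured entry, skip it
        if match["bang"]:
            breaking.append(match["desc"])
        elif match["type"] in wanted:
            features.append(match["desc"])
    return features, breaking


if __name__ == "__main__":
    log = [
        "- feat(ui): add dark mode",
        "- fix: crash on start",
        "- feat(api)!: drop v1 endpoints",
    ]
    features, breaking = filter_changelog(log)
    print("New features:", features)
    print("Breaking changes:", breaking)
```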

Translations. I find ChatGPT excellent at translating Asian languages - especially all the esoteric terms used in badly-translated Chinese webcomics. I feed in the pinyin word and provide context, and ChatGPT tells me what it means in that context, and also provides alternate translations. This is 100 times better than just using Google Translate or whatever dumb dictionary-based translator, because context is everything in Asian languages.

4·9 months ago

It’s not in the opposite order, it’s just flipped around in the photo lol.

9·9 months ago

Looks like this might work: https://www.aliexpress.com/item/1005005094002506.html

4·9 months ago

deleted by creator

4·9 months ago

If it’s just for personal use, why not just use Tasker? Judging by what you’ve written, it could be easily done without needing any Android coding experience.

4·10 months ago

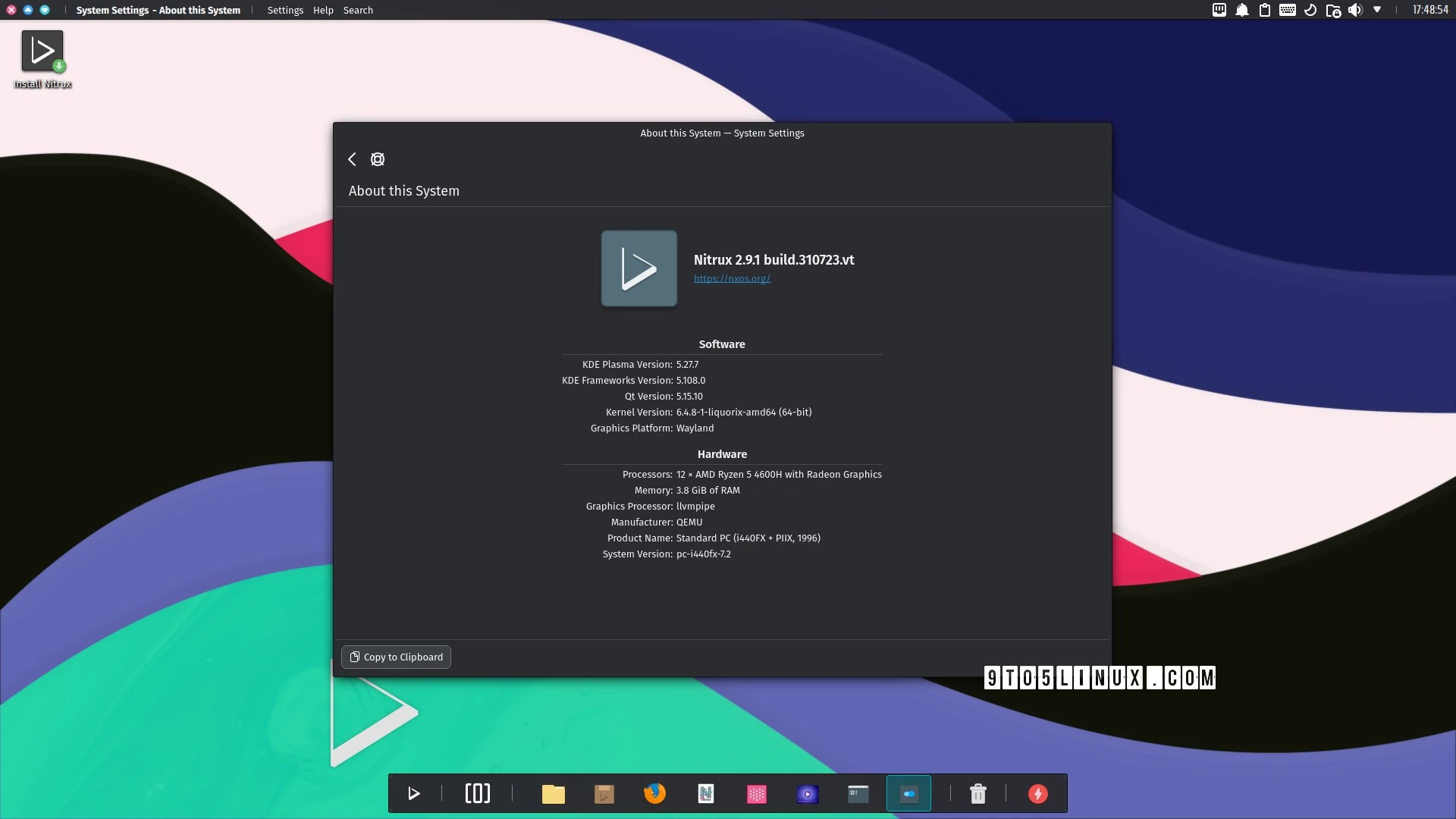

To be fair, that’s the case with Linux and laptops in general, right? Unless you’ve got a mainstream/popular model (or a brand known to work well with, or officially support, Linux), issues with sound, battery life and even suspend, wifi etc are fairly common. Which is why one of the most common Linux questions (besides “which distro”) is “which laptop”.

10·10 months ago

Depends on the hardware. If it’s x86 then that’s most certainly a yes, if it’s ARM then YMMV.

1·10 months ago

From @SuperIce@lemmy.world:

If the PoS supports tokens, it’ll use unique tokens for each payment. If the PoS doesn’t support tokens, the phone has a virtual credit card number linked to the real one, so if it does get stolen, you can just remove the card from your Google Wallet to deactivate it. Your real card number is never exposed.

Even then, credit card numbers on their own aren’t that useful anymore. Any online payment needs the CVC and PoS devices usually require chip or tap cards, which don’t use the number. On top of that, credit card companies have purchase price restrictions when using swipe because of the security risks vs chip (which is why most PoS devices don’t support swipe anymore).

1·10 months ago

deleted by creator

1·10 months ago

There are plenty of HDMI switches or splitters out there that support audio extraction, just put one of them between your source device and your monitor. Like this one: https://www.amazon.ca/gp/product/B00XJITK7E

1·10 months ago

??? The output is provided by whatever box you’re connecting to the monitor - set-top box, Android TV, Apple TV etc.

2·10 months ago

As I mentioned earlier, use a soundbar or dedicated speakers (most TV speakers suck anyways). Also, for reasonably priced monitors, look for ones marketed as “commercial displays” - they’re generally the same price or even cheaper than a similar spec’d TV.

3·10 months ago

Yes, Viewsonic for instance is one company that makes them. Although, they’re typically advertised as a “commercial LED display” or something like that. Basically look for “display” instead of “TV”.

12·10 months ago

Just get a monitor. The only real difference between a monitor and a TV these days is the lack of a speaker, and “smart” stuff. But TV speakers suck anyways so you’d be better off using a soundbar regardless.

5·10 months ago

I did the TV -> projector swap last year, got myself a 4K projector that sits above my bed and projects a massive 100" image on the wall opposite my bed, and it’s awesome. I’ve got my PS5 and Switch hooked to it, and I’m currently living the dream of being able to game and watch movies on a giant screen, all from the comfort of my bed. Some games really shine on such a screen and you see them in a new light, like TotK, Horizon series, Spiderman etc and it’s 100% worth the switch, IMO.

Now I also have a regular monitor - a nice low latency QHD 16:10 monitor with HDR, hooked up to my PC, which also uses a 6600 XT btw. Main reason I use this setup is for productivity, running some PC games that don’t have console equivalents, plus the colors look much nicer compared to my projector. Maybe if I bought a laser projector and had one of those special ALR screens I could get nicer colors, but all that is way beyond my budget. Although these days I’m not on my desktop as much as I used to be (I also have a Ryzen 6000 series laptop that I game on btw), I still like my desktop because of the flexibility and upgradability. I also explored the option of switching to a cloud-first setup and ditching my rig, back when I wanted to upgrade my PC and we had all those supply chain issues during Covid, but in the end, cloud gaming didn’t really work out for me. In fact after exploring all the cloud options, I’ve been kind of put off by cloud computing in general - at least, the public clouds being offered by the likes of Amazon and Microsoft - they’re just in it to squeeze you dry, and take control away from you, and I don’t like that one bit. If I were to lean towards cloud anything, it’d be rolling my own, maybe using something like a Linode VM with a GPU, but the pricing doesn’t justify it if you’re looking at anything beyond casual usage. And that’s one of the things I like about PC, I could have it running 24x7 if I wanted to and not worry about getting a $200 bill at the end of the month, like I got with Azure, because scummy Microsoft didn’t explain anywhere that you’d be paying for bastion even if the VM was fully powered off…

Anyways, back to the topic of CPUs, I don’t really think we’re at the cusp of any re-imagining; what we’ve been seeing is just gradual and natural improvements, maybe the PC taking inspiration from the mobile world. I haven’t seen anything revolutionary yet, it’s all been evolutionary. At the most, I think we’d see more ARM-like models, like the integrated RAM you mentioned, more SoC/integrated solutions, maybe AI/ML cores being the new thing to look for in a CPU, maybe ARM itself making more inroads towards the desktop and laptop space, since Apple have shown that you can use ARM for mainstream computing.

On the revolutionary side, the things I’ve been reading about are stuff like quantum CPUs or DNA computers, but these are still very experimental, with very niche use-cases. In the future I imagine we might have something like a hybrid semi-organic computer, with a literal brain that forms organic neural networks and evolves as per requirements. I think that would be truly revolutionary, but we’re not there yet, not even at the cusp of it. Everything else that I’ve seen from the likes of Intel and AMD has just been evolutionary.

11·10 months ago

To be fair, on modern systems it does open quickly in spite of its size (probably because most of the shared libraries for UWP apps are already loaded in memory). And at the moment, the new Notepad doesn’t offer any additional features which are common in heavy duty editors, so the “bloat” is mostly from an engineering standpoint. Well, I guess with the recent unwanted addition of Bing search, we’re now starting to see signs of actual user-facing bloat.

You could make yours a Geocaching app with fog-of-war. They’ve got an API for third-party apps too, so it should be easy enough to develop, and actually fun to play.

If you’re feeling ambitious, add some RTS elements like from Age of Empires - eg if you add someone as your ally, you can share their line of sight. Finding certain types of caches, or POIs, increases your resources (gold/stone/food/wood - maybe visiting Pizza Hut gives you extra food lol). And with these resources, you could build virtual structures like castles etc. Other players could spend resources to take it down (but they need to be physically at that location), and you could spend resources to defend your building.

Kinda like Ingress basically, but with medieval/RTS elements and geocaching thrown into the mix. How’s that for a challenge? :)
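To make the fog-of-war and shared line-of-sight idea a bit more concrete, here’s a minimal sketch of the core check: a cache is revealed if it’s within sight range of you or any of your allies. Everything here is hypothetical (the `visible_caches` function, the 200 m sight radius, the `(name, lat, lon)` tuples), and it doesn’t touch the actual Geocaching API at all; it just uses the standard haversine formula for distance.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def visible_caches(player, allies, caches, sight_radius_m=200.0):
    """Names of caches within sight of the player or any ally.

    player and each ally are (lat, lon) tuples; caches are (name, lat, lon).
    The sight radius is an arbitrary illustrative value.
    """
    observers = [player, *allies]
    return [
        name
        for name, lat, lon in caches
        if any(haversine_m(o[0], o[1], lat, lon) <= sight_radius_m for o in observers)
    ]


if __name__ == "__main__":
    me = (0.0, 0.0)
    ally = (0.0, 0.01)  # roughly 1.1 km east of me
    caches = [
        ("near_me", 0.0, 0.001),
        ("near_ally", 0.0, 0.0105),
        ("far_away", 1.0, 1.0),
    ]
    print(visible_caches(me, [], caches))      # only what I can see myself
    print(visible_caches(me, [ally], caches))  # plus what my ally reveals
```

A real game would also want to remember which areas have already been explored, but that’s just a set of revealed cells on top of this check.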