If this is the way to superintelligence, it remains a bizarre one. “This is back to a million monkeys typing for a million years generating the works of Shakespeare,” Emily Bender told me. But OpenAI’s technology effectively crunches those years down to seconds. A company blog boasts that an o1 model scored better than most humans on a recent coding test that allowed participants to submit 50 possible solutions to each problem—but only when o1 was allowed 10,000 submissions instead. No human could come up with that many possibilities in a reasonable length of time, which is exactly the point. To OpenAI, unlimited time and resources are an advantage that its hardware-grounded models have over biology. Not even two weeks after the launch of the o1 preview, the start-up presented plans to build data centers that would each require the power generated by approximately five large nuclear reactors, enough for almost 3 million homes.

Full article:

This week, OpenAI launched what its chief executive, Sam Altman, called “the smartest model in the world”—a generative-AI program whose capabilities are supposedly far greater, and more closely approximate how humans think, than those of any such software preceding it. The start-up has been building toward this moment since September 12, a day that, in OpenAI’s telling, set the world on a new path toward superintelligence.

That was when the company previewed early versions of a series of AI models, known as o1, constructed with novel methods that the start-up believes will propel its programs to unseen heights. Mark Chen, then OpenAI’s vice president of research, told me a few days later that o1 is fundamentally different from the standard ChatGPT because it can “reason,” a hallmark of human intelligence. Shortly thereafter, Altman pronounced “the dawn of the Intelligence Age,” in which AI helps humankind fix the climate and colonize space. As of yesterday afternoon, the start-up has released the first complete version of o1, with fully fledged reasoning powers, to the public. (The Atlantic recently entered into a corporate partnership with OpenAI.)

On the surface, the start-up’s latest rhetoric sounds just like the hype the company has built its $157 billion valuation on. Nobody on the outside knows exactly how OpenAI makes its chatbot technology, and o1 is its most secretive release yet. The mystique draws interest and investment. “It’s a magic trick,” Emily M. Bender, a computational linguist at the University of Washington and prominent critic of the AI industry, recently told me. An average user of o1 might not notice much of a difference between it and the default models powering ChatGPT, such as GPT-4o, another supposedly major update released in May. Although OpenAI marketed that product by invoking its lofty mission—“advancing AI technology and ensuring it is accessible and beneficial to everyone,” as though chatbots were medicine or food—GPT-4o hardly transformed the world.

But with o1, something has shifted. Several independent researchers, while less ecstatic, told me that the program is a notable departure from older models, representing “a completely different ballgame” and “genuine improvement.” Even if these models’ capacities prove not much greater than their predecessors’, the stakes for OpenAI are. The company has recently dealt with a wave of controversies and high-profile departures, and model improvement in the AI industry overall has slowed. Products from different companies have become indistinguishable—ChatGPT has much in common with Anthropic’s Claude, Google’s Gemini, xAI’s Grok—and firms are under mounting pressure to justify the technology’s tremendous costs. Every competitor is scrambling to figure out new ways to advance their products.

Over the past several months, I’ve been trying to discern how OpenAI perceives the future of generative AI. Stretching back to this spring, when OpenAI was eager to promote its efforts around so-called multimodal AI, which works across text, images, and other types of media, I’ve had multiple conversations with OpenAI employees, conducted interviews with external computer and cognitive scientists, and pored over the start-up’s research and announcements. The release of o1, in particular, has provided the clearest glimpse yet at what sort of synthetic “intelligence” the start-up and companies following its lead believe they are building.

The company has been unusually direct that the o1 series is the future: Chen, who has since been promoted to senior vice president of research, told me that OpenAI is now focused on this “new paradigm,” and Altman later wrote that the company is “prioritizing” o1 and its successors. The company believes, or wants its users and investors to believe, that it has found some fresh magic. The GPT era is giving way to the reasoning era.

Last spring, I met Mark Chen in the renovated mayonnaise factory that now houses OpenAI’s San Francisco headquarters. We had first spoken a few weeks earlier, over Zoom. At the time, he led a team tasked with tearing down “the big roadblocks” standing between OpenAI and artificial general intelligence—a technology smart enough to match or exceed humanity’s brainpower. I wanted to ask him about an idea that had been a driving force behind the entire generative-AI revolution up to that point: the power of prediction.

The large language models powering ChatGPT and other such chatbots “learn” by ingesting unfathomable volumes of text, determining statistical relationships between words and phrases, and using those patterns to predict what word is most likely to come next in a sentence. These programs have improved as they’ve grown—taking on more training data, more computer processors, more electricity—and the most advanced, such as GPT-4o, are now able to draft work memos and write short stories, solve puzzles and summarize spreadsheets. Researchers have extended the premise beyond text: Today’s AI models also predict the grid of adjacent colors that cohere into an image, or the series of frames that blur into a film.
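The next-word prediction described above can be illustrated at toy scale with a bigram model: count which word follows which in a corpus, then predict the most frequent follower. This is a deliberately simplified sketch; the tiny corpus and the function names are hypothetical, and real LLMs learn these statistics with neural networks over subword tokens rather than raw word counts.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if nothing ever followed it."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" twice, more than any other word
print(predict_next(model, "sat"))
```

Scaling the same idea up (longer contexts, learned rather than counted statistics) is, loosely, what "taking on more training data" buys.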

The claim is not just that prediction yields useful products. Chen claims that “prediction leads to understanding”—that to complete a story or paint a portrait, an AI model actually has to discern something fundamental about plot and personality, facial expressions and color theory. Chen noted that a program he designed a few years ago to predict the next pixel in a grid was able to distinguish dogs, cats, planes, and other sorts of objects. Even earlier, a program that OpenAI trained to predict text in Amazon reviews was able to determine whether a review was positive or negative.

Today’s state-of-the-art models seem to have networks of code that consistently correspond to certain topics, ideas, or entities. In one now-famous example, Anthropic shared research showing that an advanced version of its large language model, Claude, had formed such a network related to the Golden Gate Bridge. That research further suggested that AI models can develop an internal representation of such concepts, and organize their internal “neurons” accordingly—a step that seems to go beyond mere pattern recognition. Claude had a combination of “neurons” that would light up similarly in response to descriptions, mentions, and images of the San Francisco landmark. “This is why everyone’s so bullish on prediction,” Chen told me: In mapping the relationships between words and images, and then forecasting what should logically follow in a sequence of text or pixels, generative AI seems to have demonstrated the ability to understand content.

The pinnacle of the prediction hypothesis might be Sora, a video-generating model that OpenAI announced in February and which conjures clips, more or less, by predicting and outputting a sequence of frames. Bill Peebles and Tim Brooks, Sora’s lead researchers, told me that they hope Sora will create realistic videos by simulating environments and the people moving through them. (Brooks has since left to work on video-generating models at Google DeepMind.) For instance, producing a video of a soccer match might require not just rendering a ball bouncing off cleats, but developing models of physics, tactics, and players’ thought processes. “As long as you can get every piece of information in the world into these models, that should be sufficient for them to build models of physics, for them to learn how to reason like humans,” Peebles told me. Prediction would thus give rise to intelligence. More pragmatically, multimodality may also be simply about the pursuit of data—expanding from all the text on the web to all the photos and videos, as well.

“In OpenAI’s early tests, scaling o1 showed diminishing returns: Linear improvements on a challenging math exam required exponentially growing computing power.”

Sounds like most other drugs, too.
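The quoted finding describes a log-linear relationship: if a score rises by a fixed amount each time compute doubles, then equal steps in score cost multiplicatively more compute. A sketch with made-up constants (BASE_SCORE and POINTS_PER_DOUBLING are hypothetical, not OpenAI's measurements):

```python
import math

# Hypothetical constants chosen only for illustration, not measured values.
BASE_SCORE = 10.0          # score at 1 unit of compute
POINTS_PER_DOUBLING = 5.0  # score gained each time compute doubles


def score(compute: float) -> float:
    """Log-linear model: each doubling of compute adds a fixed number of points."""
    return BASE_SCORE + POINTS_PER_DOUBLING * math.log2(compute)


def compute_needed(target: float) -> float:
    """Invert the model: units of compute required to reach a target score."""
    return 2 ** ((target - BASE_SCORE) / POINTS_PER_DOUBLING)


for target in (30, 40, 50, 60):
    print(f"score {target}: {compute_needed(target):,.0f}x compute")
```

Under these assumptions every extra 10 points multiplies the required compute by 4, which is what "linear improvements, exponential cost" looks like in practice.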

The GPT Era Is Already Ending

Had it begun? All I saw was a frenzy of idiotic investment cheered on shamelessly by hypocritical hype men.

Seriously, I tried using ChatGPT for my work sooo often, and it never gave me results I could work with. I was promised a tool that replaces me, yet it only suggests things that don’t work correctly.

Oh, I saw a ton of search results feeding me worthless AI-generated vomit. It definitely changed things.

How is it useful to type out millions of wrong solutions to come up with the right one? That only works on a research project when you’re searching for patterns. If you are trying to code, it needs to be right the first time, every time it’s run, especially if it’s in a production environment.

It’s not.

But lying lets them defraud more investors.

TDD, Test-Driven Development: a human writes requirements and, with the help of the AI, derives tests from them. The AI then writes code until the tests pass.
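The loop described here can be sketched as "try candidates until one passes the human-written tests". This is a hypothetical illustration: the `clamp` requirement, the test cases, and the hard-coded `attempts` standing in for model output are all assumptions, not any real tool's API.

```python
from typing import Callable, Iterable, Optional

Clamp = Callable[[int, int, int], int]


def passes_tests(clamp: Clamp) -> bool:
    """Human-written tests derived from the requirement:
    "clamp(x, lo, hi) returns x limited to the range [lo, hi]"."""
    cases = [((5, 0, 10), 5), ((-3, 0, 10), 0), ((42, 0, 10), 10)]
    try:
        return all(clamp(*args) == expected for args, expected in cases)
    except Exception:
        return False  # a crashing candidate counts as a failure


def first_passing(candidates: Iterable[Clamp]) -> Optional[Clamp]:
    """Try generated candidates in order; stop at the first that passes every test."""
    for candidate in candidates:
        if passes_tests(candidate):
            return candidate
    return None


# Stand-ins for AI-generated attempts (hypothetical; a real setup would call a model).
attempts = [
    lambda x, lo, hi: x,                    # ignores the bounds
    lambda x, lo, hi: max(lo, x),           # forgets the upper bound
    lambda x, lo, hi: min(hi, max(lo, x)),  # correct
]
winner = first_passing(attempts)
```

The design puts all the judgment into the tests: the generator can be as dumb as it likes, but only as good as the tests that filter it.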

Well, actually, there are ways to automate quality assurance.

If a programmer reasonably knew that one of these 10,000 files was the “correct” code, they could pull out quality assurance tests and find that code pretty dang easily, all things considered.

Those tests would eliminate most of the 9,999 wrong ones, and then the QA person could look through the remaining ones by hand. Like a CAPTCHA for programming code.

The power usage still makes this a ridiculous solution.

That seems like an awful solution. Writing a QA test for every tiny thing I want to do is going to add far more work to the task. This would increase the workload, not shorten it.

We already have to do that as humans in many industries like automobile, aviation, medicine, etc.

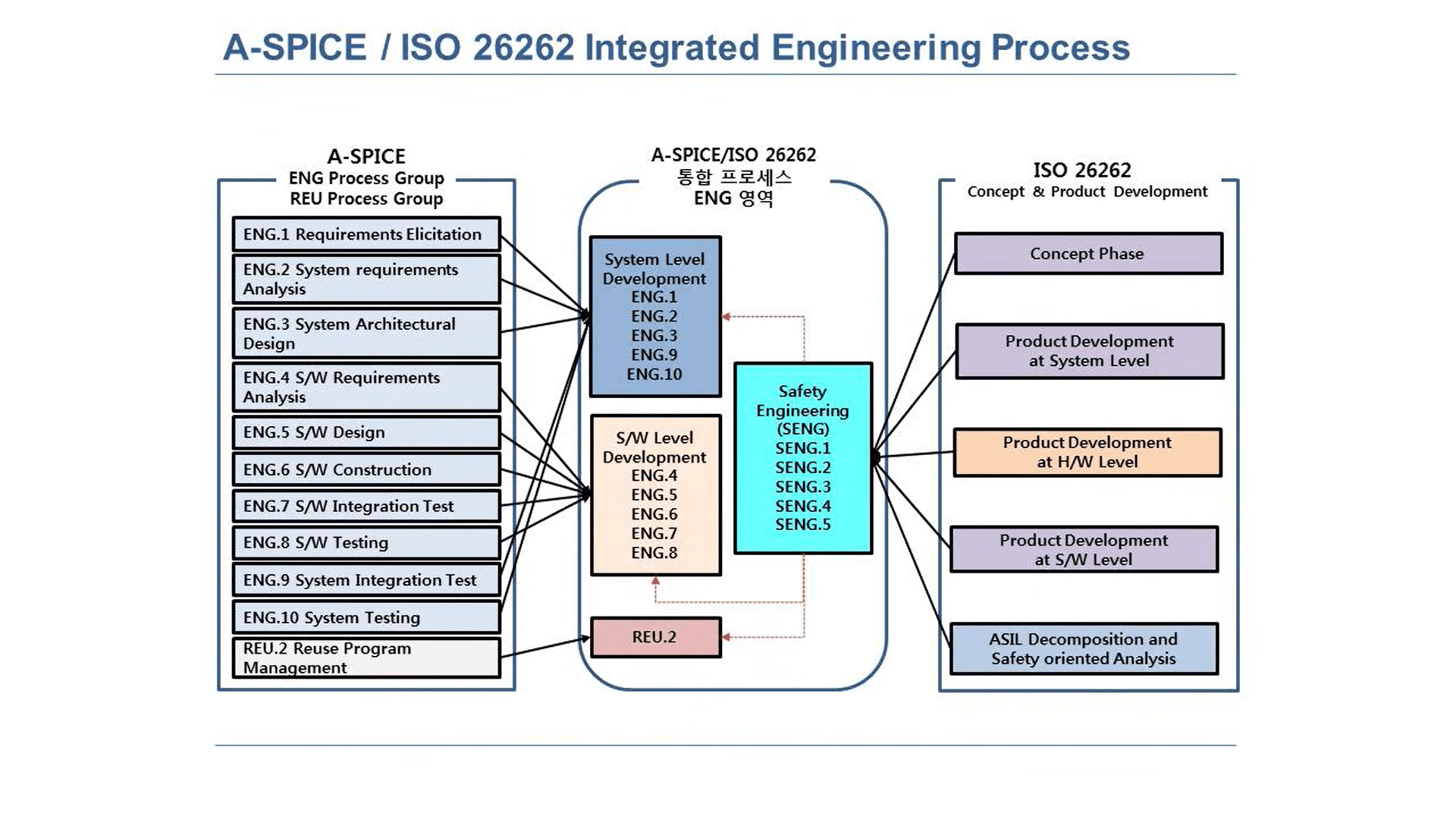

We have several layers of tests:

- Unit test

- Component test

- Integration / API test

- Subsystem test

- System test

On each level we test the code against the requirements and architecture documentation. It’s a huge amount of work.

In automotive, we have several standard processes that must be followed during development, such as ASPICE and ISO 26262.

I’ve worked in both the automotive and aerospace industries. A unit test is not the same thing as creating a QA script to go through millions of lines of code generated by an AI. That’s such an asinine suggestion. You’ve clearly not worked on any practical software application, or you’d know this is utter hogwash.

I think you (or I) misunderstand something. You have a test for a small, well-defined unit like a C function and let the AI generate code until the test passes. The unit test is binary: either it passes or it doesn’t. The unit test only looks at the result after running the unit with different inputs; it does not “go through millions of lines of code”.

And you keep doing that for every unit.

The writing of the code is a fairly mechanical thing at this point because the design has been done in detail before by the human.

How often have you ever written a piece of code that is super well defined? When I start working on a project, I have very little guidance on what the code should look like. This is the equivalent of the spherical chicken in a vacuum problem in physics classes. It’s not a real case you’ll ever see.

And in cases where it is a short well defined function, just write the function. You’ll be done before the AI finishes.

This sounds pretty typical for a hobbyist project but is not the case in many industries, especially regulated ones. It is not uncommon to have engineers whose entire job is reading specifications and implementing them. In those cases, it’s often the case that you already have compliance tests that can be used as a starting point for your public interfaces. You’ll need to supplement those compliance tests with lower level tests specific to your implementation.

Many people write tests before writing code. This is common and called Test-Driven Development. Having an AI brute-force your unit tests is actually already the basis for a “programming language” that I saw on Hacker News a week or so ago.

I despise most AI applications, and this is definitely one. However it’s not some foreign concept impossible in reality:

https://wonderwhy-er.medium.com/ai-tdd-you-write-tests-ai-generates-code-c8ad41813c0a

The unit test is binary, either it passes or not.

For that use case yes, but when you have unpredictable code, you would need to write way more just to do sanity checks for behaviour you haven’t even thought of.

As in, using AI might introduce waaay more edge cases.
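To illustrate the edge-case point: a binary unit test only checks the inputs someone thought to include, so a candidate can pass it and still be wrong elsewhere. A hypothetical sketch using leap years, where the test suite happens to omit century years:

```python
def reference_is_leap(year: int) -> bool:
    """Gregorian rule: divisible by 4, except centuries, unless divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def generated_is_leap(year: int) -> bool:
    """A plausible generated candidate that only knows the divisible-by-4 rule."""
    return year % 4 == 0


# The test someone wrote covers only "ordinary" years.
TEST_CASES = {2023: False, 2024: True, 2019: False, 2020: True}


def unit_test(fn) -> bool:
    """Binary verdict: True only if every listed case matches."""
    return all(fn(year) == expected for year, expected in TEST_CASES.items())


print(unit_test(generated_is_leap))                      # True: the test passes
print(generated_is_leap(1900), reference_is_leap(1900))  # True False: untested edge case
```

Both versions get the same green checkmark; only a test that someone thought to write for 1900 would tell them apart.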

If you first have to write comprehensive unit/integration tests, then have a model spray code at them until it passes, that isn’t useful. If you spend that much time writing perfect tests, you’ve already written probably twice the code of just the solution and reasonable tests.

Also you have an unmaintainable codebase that could be a hairball of different code snippets slapped together with dubious copyright.

Until they hit real AGI, this is just fancy autocomplete. With the hype, they may dissuade a whole generation from picking a software engineering career today. If they don’t actually make it to AGI, it will take a long time to recover, and humans who actually know how to fix AI slop will make bank.

It’s a great article IMO, worth the read.

But :

“This is back to a million monkeys typing for a million years generating the works of Shakespeare,”

This is such a stupid analogy; the chance of said monkeys accidentally matching even a single full page is so slim, it’s practically zero.

Just typing a simple six-letter word like “stupid” already has 26⁶ possible letter combinations, which is 308,915,776 combinations for that single simple word!

A page has about 2,000 letters, which gives 26²⁰⁰⁰ ≈ 8.8e+2829 combinations. And that’s disregarding punctuation, capital letters, special characters, and numbers.

A million monkeys times a million years times 365 days times 24 hours times 60 minutes times 60 seconds times 10 random keystrokes per second is only 315,360,000,000,000,000,000, or 3.15e+20, combinations, assuming none are repeated. That’s only 21 digits, leaving it about 2,809 digits short of creating a single page even once.

I’m so sick of seeing this analogy, because it misses the point by an insane margin. It is extremely misleading and completely misrepresents the odds of getting something very complex right by chance.
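These figures are easy to check with Python's arbitrary-precision integers. A sketch assuming a 26-letter lowercase alphabet, 2,000 letters per page, and 10 keystrokes per second. Note that the budget counts keystrokes, and each attempt at a page consumes about 2,000 of them, so the real shortfall is even larger than the digit counts suggest.

```python
ALPHABET = 26          # lowercase letters only: no punctuation, capitals, or digits
PAGE_LETTERS = 2000    # rough letter count for one page

word_combinations = ALPHABET ** 6             # every 6-letter string, e.g. "stupid"
page_combinations = ALPHABET ** PAGE_LETTERS  # exact big integer, no overflow

# Keystroke budget: 1e6 monkeys x 1e6 years x seconds per year x 10 keys per second.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60
keystrokes = 10**6 * 10**6 * SECONDS_PER_YEAR * 10

print(f"{word_combinations:,}")     # 308,915,776
print(len(str(page_combinations)))  # 2830 digits
print(len(str(keystrokes)))         # 21 digits
```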

To generate a work of Shakespeare by chance is impossible in the lifespan of this universe. The mathematical likelihood is so staggeringly low that, AFAIK, it’s considered impossible by any scientific and mathematical standard.

the actual analog isn’t a million monkeys. you only need one monkey. but it’s for an infinite amount of time. the probability isn’t practically zero, it’s one. that’s how infinity works. not only will it happen, but it will happen again, infinitely many times.

That’s not true. Something can be infinite and still not contain every possibility. This is a common misconception.

For instance, consider an infinite series of numbers created by adding an additional “1” to the end of the previous number.

So we can start with 1. The next term is 11, followed by 111, then 1111, etc. The series is infinite since we can keep the pattern going forever.

However at no point will you ever see a “2” in the sequence. The infinite series does not contain every possible digit.
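The repunit example can be sketched in a few lines: a generator produces the sequence indefinitely, and by construction no term ever contains a 2. Checking the first 1,000 terms is only a spot check, not a proof; the proof is the construction itself, since each step only appends a 1.

```python
from itertools import islice


def repunits():
    """Yield 1, 11, 111, ... forever: an infinite sequence built only from 1s."""
    n = 1
    while True:
        yield n
        n = n * 10 + 1  # append another "1" to the previous term


first_1000 = list(islice(repunits(), 1000))
print(any("2" in str(term) for term in first_1000))  # False
```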

Anything with a nonzero probability will happen infinitely many times. The complete works of Shakespeare consist of 5,132,954 characters, 78 distinct ones. 1/78^5,132,954 is an incomprehensibly tiny number, millions of zeroes after the decimal point, but it is not zero. So the probability of it happening after infinitely many trials is 1: lim (1 − (1 − P)^n) as n → ∞ is 1 for any nonzero P.
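The limit in that comment can be checked numerically. A sketch (function names are hypothetical) that computes 1 − (1 − P)^n stably for small P using `math.log1p` and `math.expm1`; the Shakespeare probability itself underflows a float, so its size is handled in log space instead.

```python
import math


def prob_at_least_once(p: float, n: int) -> float:
    """P(at least one success in n independent trials) = 1 - (1 - p)**n.

    Computed as -expm1(n * log1p(-p)) so a tiny p does not round away.
    """
    return -math.expm1(n * math.log1p(-p))


def trials_for_half(p: float) -> float:
    """Trials after which success has a 50% chance: ln(0.5) / ln(1 - p)."""
    return math.log(0.5) / math.log1p(-p)


# Hypothetical example: chance of typing one specific 6-letter word
# in a single 6-keystroke attempt on a 26-letter keyboard.
p = 26.0 ** -6
for n in (10**6, 10**8, 10**10):
    print(f"{n:>14,} attempts: {prob_at_least_once(p, n):.6f}")
print(f"50% chance after about {trials_for_half(p):,.0f} attempts")

# 1/78**5_132_954 underflows a float, so count its decimal digits in log space.
shakespeare_log10 = 5_132_954 * math.log10(78)
print(f"P = 10^-{shakespeare_log10:,.0f}")  # roughly 9.7 million zeroes
```

The probability climbs toward 1 for any nonzero p; what changes with smaller p is only how astronomically many trials that takes.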

An outcome that you’d never see would be a character that isn’t on the keyboard.

why do you keep changing the parameters? yeah, if you exclude the possibility of something happening it won’t happen. duh?

that’s not what’s happening in the infinite monkey theorem. it’s random key presses. that means every character has an equal chance of being pressed.

no one said the monkey would eventually start painting, or even type Arabic words. it has a typewriter, presumably an English one, so the results will include every possible string of characters ever.

it’s not a common misconception, you just don’t know what the theorem says at all.

so the results will include every possible string of characters ever.

That’s just not true. One monkey could spend eternity pressing “a”. It doesn’t matter that he does it infinitely. He will never type a sentence.

If the keystrokes are random that is just as likely as any other output.

Being infinite does not guarantee every possible outcome.

Any possibility, no matter how small, becomes a certainty when dealing with infinity. You seem to fundamentally misunderstand this.

no. you don’t understand infinity, and you don’t understand probability.

if every keystroke is just as likely as any other keystroke, then each of them will be pressed an infinite number of times. that’s what just as likely means. that’s how random works.

if the monkey could press a for an eternity, then by definition it’s not as likely as any other keystroke. you’re again changing the parameters to a monkey whose probability of pressing a is 1 and every other key is 0. that’s what your claim means.

for a monkey that presses the keys randomly, which means the probability of each key is equal, every string of characters will be typed. you can find the letter a typed a million times consecutively, and a billion times and a quadrillion times. not only will you find any number of consecutive keystrokes of every letter, but you will find it repeated an infinite number of times throughout.

being infinite does guarantee every possible outcome. what you’re ruling out from infinity is literally impossible by definition.

if you exclude the possibility of something happening it won’t happen

That’s exactly my point. Infinity can be constrained. It can be infinite yet also limited. If we can exclude something from infinity then we have shown that an infinite set does NOT necessarily include everything.

this has nothing to do with the matter

This is actually a way dumber argument than you think you’ve made.

The original statement was that if something is infinite it must contain all possibilities. I showed one of many examples that do not, therefore the statement is not true. It’s a common misconception.

Please use your big boy words to reply instead of calling something “dumb” for not understanding.

Infinite monkeys and infinite time are equally stupid, because obviously you can’t have either, for the simple reason that the universe is finite.

And apart from that, it’s stupid because if you use an infinite random string, EVERYTHING is contained in it! I’m sorry, it just annoys the hell out of me, because it’s a thought experiment, and it’s stupid to use it as an analogy or example to describe anything in the real world.

You wouldn’t need infinite time if you had infinite monkeys.

An infinite number of them would produce it on the very first try!

You wouldn’t need infinite time if you had infinite monkeys.

Obviously, but as I wrote BOTH are impossible, so it’s irrelevant. I just didn’t think I’d have to explain WHY infinite monkeys is impossible, while some might think the universe is infinite also in time, which it is not.

I also already wrote that if you have an infinite string everything is contained in it.

But even with infinite monkeys it’s not instant, because technically each monkey needs to finish a page. But I understand what you mean, and that’s exactly why the theorem is so stupid IMO. You could also have 1 monkey and infinite time.

But both are still impossible. When I say it’s stupid, I don’t mean as a thought experiment, which is its purpose. The stupid part is when people think they can use it as an analogy or example to describe something.

It’s a theorem. It’s theoretical. This is like complaining about the 20 watermelon example being unrealistic: that’s not what it is about.

Don’t look for statistical precision in analogies. That’s why it’s called an analogy, not a calculation.

The quote is misquoting the analogy. It is an infinite number of monkeys.

The point of the analogy is about randomness and infinity. Any page of gibberish is equally as likely as a word-perfect page of Shakespeare, given equal weighting to the entry of characters. There are factors introduced by the behaviours of monkeys and the placement of keys, but I don’t think that is the point of the analogy.

In the meantime weasel programs are very effective, and a better, if less known metaphor.
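Dawkins' weasel program contrasts cumulative selection with the single-step randomness of the monkeys: instead of retyping the whole phrase from scratch, it keeps the best of many mutated copies each generation. A minimal sketch; the population size, mutation rate, seed, and the choice to keep the parent in the pool (elitism) are assumptions for illustration, not details from the thread.

```python
import random

TARGET = "METHINKS IT IS LIKE A WEASEL"
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ "


def fitness(candidate: str) -> int:
    """Number of positions that already match the target."""
    return sum(c == t for c, t in zip(candidate, TARGET))


def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Copy the parent, replacing each character with a random one at the given rate."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in parent)


def weasel(offspring: int = 100, rate: float = 0.05, seed: int = 0) -> tuple[str, int]:
    """Run cumulative selection until the target phrase appears."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)  # random starting string
    generation = 0
    while parent != TARGET:
        generation += 1
        brood = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Keeping the parent in the pool means fitness never goes backwards.
        parent = max(brood + [parent], key=fitness)
    return parent, generation


result, generations = weasel()
print(result, generations)
```

Pure random typing would need on the order of 27^28 attempts for this phrase; selection that keeps partial progress typically gets there in a few hundred generations or fewer.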

Sadly the monkeys thought experiment is a much more well known example.

Irrelevant nerd thought, back in the early nineties, my game development company was Monkey Mindworks based on a joke our (one) programmer made about his method of typing gibberish into the editor and then clearing the parts that didn’t resemble C# code.

C# didn’t exist in the early 90s, perhaps you’re thinking of another language?

Obligatory: 95% of paint splatters are valid Perl programs

a million monkeys typing for a million years generating the works of Shakespeare

FFS, it’s one monkey and infinite years. This is the second time I’ve seen someone make this mistake in an AI article in the past month or so.

I always thought it was a small team, not millions. But yeah, one monkey with infinite time makes sense.

The whole point is that one of the terms has to be infinite. But it also works with infinite number of monkeys, one will almost surely start typing Hamlet right away.

The interesting part is that has already happened, since an ape already typed Hamlet, we call him Shakespeare. But at the same time, monkeys aren’t random letter generators, they are very intentional and conscious beings and not truly random at all.

one will almost surely start typing Hamlet right away

This is guaranteed with infinite monkeys. In fact, they will begin typing every single document to have ever existed, along with every document that will exist, right from the start. Infinity is very, very large.

This is guaranteed with infinite monkeys.

no, it is not. the chance of it happening will be really close to 100%, but not 100%. there is still a small chance that all of the apes will start writing the collected philosophical works of Donald Trump 😂

There’s 100% chance that all of Shakespeare’s and all of Trump’s writings will be started immediately with infinite monkeys. All of every writing past, present, and future will be immediately started (also, in every language assuming they have access to infinite keyboards of other spelling systems). There are infinite monkeys, if one gets it wrong there infinite chances to get it right. One monkey will even write your entire biography, including events that have yet to happen, with perfect accuracy. Another will have written a full transcript of your internal monologue. Literally every single possible combination of letters/words will be written by infinite monkeys.

No, not how it works

Care to elaborate?